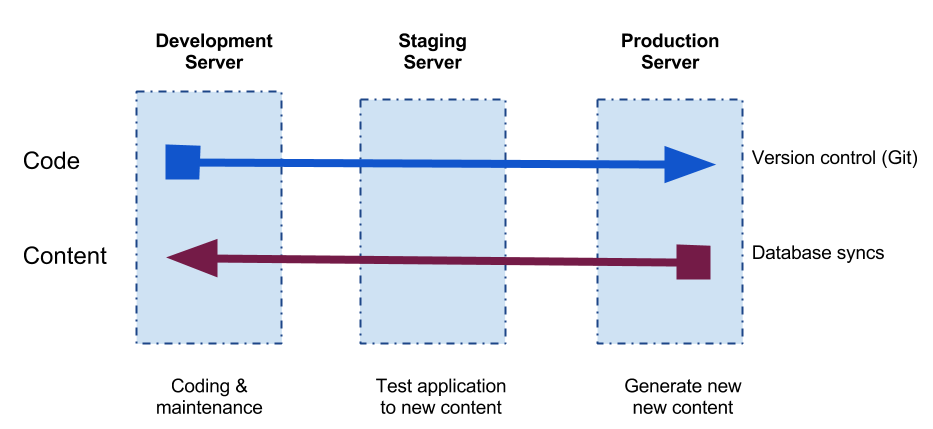

The Flow of Content & Code

There are lots of ways to set up an enterprise server environment for Drupal. But in dealing with IT folks who are coming from other Content Management Systems (CMS) or, worse, static sites, there is an assumption that an organization needs a completely isolated server in order to have control.

Historically, many organizations have not had a CMS with the workflow structure and level of interactivity that Drupal comes with. The staging server was used as the final Quality Assurance (QA) environment for new content. It was also used as a barrier between the internal network and the public Internet site.

There are rare cases where an agency might actually need to pre-approve the content and migrate it over to a DMZ, and fortunately the Deployment module can help with that. Government departments are using this, and it can be pretty painless if there's a "clear line of sight" between the servers, as the content can simply be transferred using JSON. There is also the need to export configuration into code so it can be managed by version control software. This can be done with the Configuration Management module or possibly Patterns. With Drupal 8 Core, much of the configuration is stored as YAML files, so this will be easier.

However, just because it can be done doesn't mean that it is a best practice for either organizational workflow or security.

Code changes definitely need to move from the development server to staging and then to production. This can be done easily with modern version control tools like Git. There are always going to be security upgrades to Drupal Core and modules. Active sites will also want to adjust the theme or add new modules over time, to keep up with the needs of content creators and site visitors. All of this needs to be tracked carefully.

The live database needs to be pulled down from the production server to staging. The code changes are then pushed to the master repository and pulled to the staging server. At that point the new code may require database updates, and once those have run, staging should replicate the result you will get on the live site.
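The sequence above can be sketched as a small script. This is only an illustration, not a recommended deployment tool: it assumes Drush is installed with site aliases named `@prod` and `@staging` (hypothetical names), that the Git remote is `origin` with a `master` branch, and that the script is run from the staging checkout. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess


def staging_refresh_plan(prod_alias="@prod", staging_alias="@staging",
                         remote="origin", branch="master"):
    """Return the ordered commands for refreshing staging, as argument lists.

    The alias, remote and branch names are assumptions for illustration.
    """
    return [
        # 1. Copy the live database down from production to staging.
        ["drush", "sql-sync", prod_alias, staging_alias, "-y"],
        # 2. Pull the new code from the master repository.
        ["git", "pull", remote, branch],
        # 3. Apply any database updates that the new code requires.
        ["drush", staging_alias, "updatedb", "-y"],
    ]


def run_plan(plan, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for command in plan:
        print(shlex.join(command))
        if not dry_run:
            # check=True stops the run as soon as one step fails.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_plan(staging_refresh_plan())
```

Keeping the plan as data makes it easy to review the steps before anything touches a server, and to reuse the same sequence later with different aliases.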

This is the stage to evaluate the site to see if anything has been broken. Drupal is very modular, and some module upgrades may have impacts that aren't anticipated. There are extensive unit tests available for Core and for many contributed modules that allow you to automate the testing of core functionality. These should be run on the development server. There is also a lot of work around site-specific automated browser tests through Selenium (there is also a Selenium Drupal module available with a number of built-in tests). Websites are complex enough these days that simply walking through a few key pages often isn't good enough. Automated browser testing should be done on both the staging and production servers.
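As a much lighter complement to Selenium (this is plain HTTP checking, not a browser test), a smoke test can at least confirm that key pages still respond after an upgrade. The sketch below uses only the Python standard library; the base URL and the list of paths are placeholders you would replace with your own.

```python
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the request failed outright."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        # The server answered, but with an error status such as 404 or 500.
        return error.code
    except URLError:
        # DNS failure, refused connection, timeout and similar problems.
        return None


def smoke_test(base_url, paths, fetch=fetch_status):
    """Return (path, status) pairs for every page that did not return 200."""
    failures = []
    for path in paths:
        status = fetch(base_url.rstrip("/") + path)
        if status != 200:
            failures.append((path, status))
    return failures
```

Calling `smoke_test("https://staging.example.org", ["/", "/user/login"])` returns an empty list when every page answers with 200. The `fetch` parameter exists so the check can be exercised without a network connection.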

When you're confident that the new code & new content all work as expected, repeat the steps you followed on staging on the production server.

There are many, many ways to manage workflow within Drupal (Workflow, Workbench, Revisioning, Maestro, etc.). For most websites, Drupal gives content authors enough functionality, even in organizations that need tight control over what content appears on their site.

Authoring content anywhere other than the live site is ultimately going to present a lot of limitations and complications. Any user-generated feedback would be lost, and any data that is aggregated from external websites would need to be brought in multiple times.

Administering the content in Drupal requires access to a secure HTTPS environment to ensure that passwords & content are securely transferred to the server. It would make sense to restrict access to more sensitive sites to a set list of IP addresses, but there's already a module for that. No additional access is required. Even if access to LDAP or Active Directory is required from the webserver, if that server is properly set up, there should be no security problems even if the webserver is compromised.
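The IP restriction mentioned above boils down to a simple allowlist check. The following sketch shows the idea with Python's standard `ipaddress` module; it is not how any particular Drupal module implements it, and the networks shown are reserved documentation ranges used as placeholders.

```python
import ipaddress


def is_allowed(client_ip, allowed_networks):
    """Return True if client_ip falls inside any of the allowed networks."""
    address = ipaddress.ip_address(client_ip)
    return any(
        address in ipaddress.ip_network(network)
        for network in allowed_networks
    )


# Placeholder allowlist: an office range and a single remote address.
ADMIN_NETWORKS = ["192.0.2.0/24", "203.0.113.7"]

if __name__ == "__main__":
    for ip in ("192.0.2.15", "198.51.100.4"):
        print(ip, "allowed" if is_allowed(ip, ADMIN_NETWORKS) else "denied")
```

In practice this kind of check usually lives in the webserver or firewall configuration, or in the module referred to above, rather than in custom code.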

People most often use security as the reason to have the content generated somewhere other than the production server. However, if proper security practices are in place, isolating the server brings only marginal security improvements. Having both Drupal & the server environment set up and maintained properly for security is going to address all of the problems that a website is ever likely to encounter.

This certainly requires that security is resourced properly, but there are great tools available to simplify intrusion detection & to alert administrators early about problems. This is work that really needs to be done on any site that is sitting on the Internet, however, as not properly managing security endangers not only the brand of the organization but can in fact threaten its users.

I'd really like to get feedback on this, particularly from government departments that are using Drupal. Where do people manage their content?

About The Author

Mike Gifford is the founder of OpenConcept Consulting Inc, which he started in 1999. Since then, he has been particularly active in developing and extending open source content management systems to allow people to get closer to their content. Before starting OpenConcept, Mike had worked for a number of national NGOs including Oxfam Canada and Friends of the Earth.